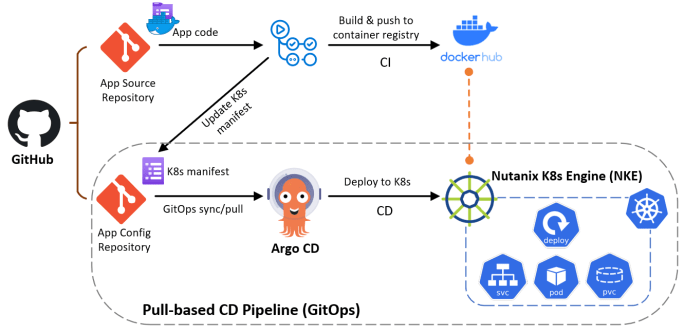

This is the 5th episode of our NKE lab series. In this episode, I will demonstrate how you can easily build a fully automated GitOps continuous delivery (CD) pipeline using GitHub, NKE and Argo CD. GitOps is an operational framework that takes DevOps best practices (such as version control, Infra-as-Code, CI/CD, etc.), and applies them … Continue reading NKE Lab series – Ep5: Build a GitOps CD pipeline using GitHub, NKE and Argo CD

NKE Lab series – Ep4: Accelerate K8s application development using NKE with Nutanix Database (NDB)

This is the 4th episode of our NKE lab series. Previously, I demonstrated how you can easily deploy an NKE cluster in a Nutanix CE lab environment, and explored some NKE platform features, including out-of-the-box CSI and CNI support. In this episode, we'll take a look at how you can accelerate Kubernetes application … Continue reading NKE Lab series – Ep4: Accelerate K8s application development using NKE with Nutanix Database (NDB)

NKE lab series – Ep3: Deep dive into NKE networking with Calico CNI

This is the 3rd episode of our NKE lab series. Previously, I walked through how to deploy an NKE-enabled Kubernetes cluster in a nested Nutanix CE environment, and how to provide persistent storage to your NKE clusters using two Nutanix CSI options. In this episode, we'll deep dive into the NKE networking space by exploring … Continue reading NKE lab series – Ep3: Deep dive into NKE networking with Calico CNI

NKE lab series – Ep2: Deploy a multi-tier web application on a NKE cluster using persistent storage with Nutanix CSI

This is the 2nd episode of our NKE lab series. In the 1st episode, I demonstrated how you can easily deploy an enterprise-grade NKE cluster in a Nutanix CE lab environment with nested virtualization. In this episode, we'll deploy a containerized multi-tier web application onto our NKE cluster by leveraging the built-in Nutanix CSI driver … Continue reading NKE lab series – Ep2: Deploy a multi-tier web application on a NKE cluster using persistent storage with Nutanix CSI

Nutanix Kubernetes Engine (NKE) lab series – Ep1: Create a NKE-enabled Kubernetes Cluster on Nutanix Community Edition (CE)

This post is the 1st episode of a Nutanix Kubernetes Engine (NKE) home lab series. In it, I will walk through the detailed process of deploying an enterprise-ready NKE-enabled Kubernetes cluster within a Nutanix CE environment. Nutanix CE is a free version of Nutanix AOS, which powers the Nutanix Enterprise Cloud Platform. It is … Continue reading Nutanix Kubernetes Engine (NKE) lab series – Ep1: Create a NKE-enabled Kubernetes Cluster on Nutanix Community Edition (CE)